A GAIDG Lab member, recent graduate, and incoming Master's student (January 2021) has had work accepted as a full conference paper on using convolutional neural networks to identify Solfege hand signs in real time, directly from pixels, on consumer-level webcams. The work will be presented at the 16th International Symposium on Visual Computing (ISVC) on October 4, 2021.

Abstract

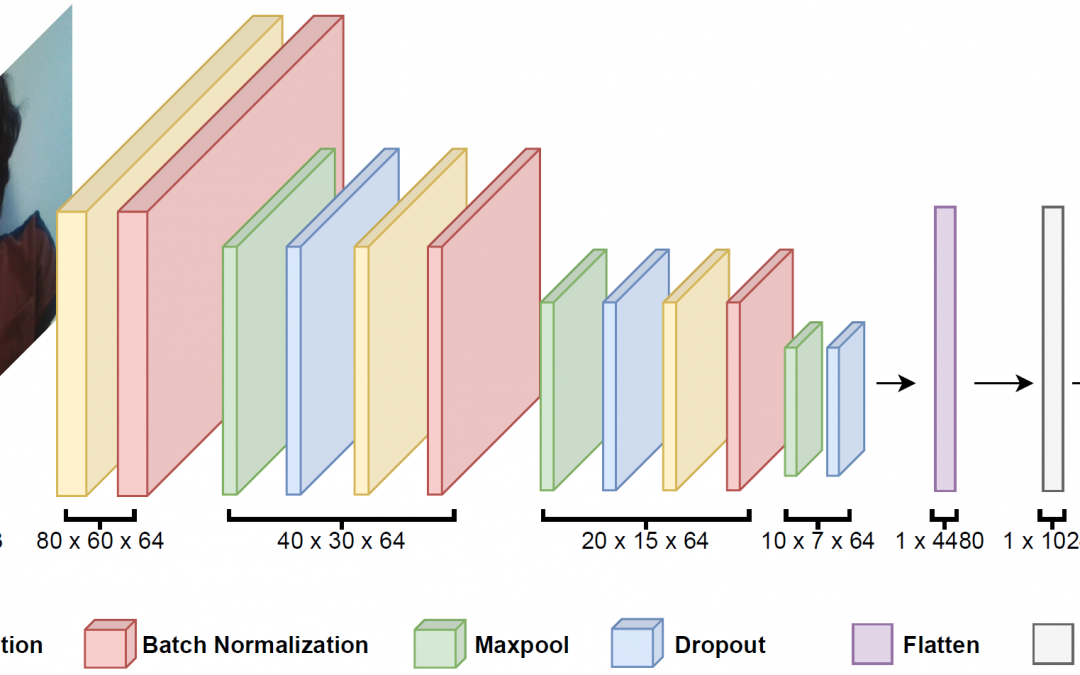

Hand signs have long been a part of elementary music theory education systems through the use of Kodály-Curwen Solfege hand signs. This paper discusses a deep learning convolutional neural network model that can identify 12 hand signs and the absence of a hand sign directly from pixels both quickly and effectively. Such a model would be useful for automated Solfege assessment in educational environments, as well as providing a novel human-computer interface for musical expression. A dataset was designed for this study containing 16,900 RGB images. Additional domain-specific image augmentation procedures were designed for this application. The proposed CNN achieves a precision, recall, and F1 score of 94%. We demonstrate the model’s capabilities by simulating a real-time environment.
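
For readers unfamiliar with the metrics quoted in the abstract, the following is a minimal sketch of how precision, recall, and F1 can be computed for a 13-class problem (12 hand signs plus the "no sign" class). It assumes macro averaging, meaning each class is scored separately and the scores are then averaged; the abstract does not say which averaging scheme the paper uses. The function name `macro_scores` and the integer label encoding are hypothetical and chosen only for illustration.

```python
from collections import Counter

# 12 hand signs + 1 "no sign" class, per the abstract.
NUM_CLASSES = 13


def macro_scores(y_true, y_pred, num_classes=NUM_CLASSES):
    """Return macro-averaged (precision, recall, F1) over all classes.

    Assumption: labels are integers in range(num_classes). Macro averaging
    is an illustrative choice, not necessarily the paper's.
    """
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1      # correct prediction for this class
        else:
            fp[pred] += 1       # predicted class was wrong
            fn[truth] += 1      # true class was missed

    precisions, recalls, f1s = [], [], []
    for c in range(num_classes):
        p_den = tp[c] + fp[c]
        r_den = tp[c] + fn[c]
        precision = tp[c] / p_den if p_den else 0.0
        recall = tp[c] / r_den if r_den else 0.0
        pr_sum = precision + recall
        f1 = 2 * precision * recall / pr_sum if pr_sum else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)

    return (
        sum(precisions) / num_classes,
        sum(recalls) / num_classes,
        sum(f1s) / num_classes,
    )
```

Calling `macro_scores(true_labels, predicted_labels)` on a held-out test set would give three numbers directly comparable in kind to the reported figures, under the averaging assumption stated above.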